Enabling data security when using AI solutions

From personalized product recommendations to autonomous vehicles, artificial intelligence is rapidly transforming industries, driving innovation, and shaping our daily lives. But AI isn’t as self-sufficient as it seems: its capabilities are only as good as the data it can access.

As generative AI systems become more sophisticated and data-hungry, they rely on vast amounts of sensitive unstructured data, from customer records to internal reports, to function effectively. This increases the risk of misuse, exposure, or breaches, making robust data security essential for safe and responsible AI adoption.

Recent surveys show that 96% of organizations are building governance for generative AI, and 82% worry about data leakage from these tools. For business leaders, the question is how to adopt AI tools into their business processes without exposing corporate secrets, confidential data, or customer information.

How AI solutions can compromise data security

Ensuring data security when using AI isn’t just about preventing breaches. It's also about understanding how sensitive information can unintentionally leak through everyday use. As AI becomes embedded into business workflows, risks arise not only during development and deployment, but also in how employees interact with these tools and how AI systems are structured to learn and respond.

For instance, an employee may paste confidential figures, customer information, or source code into a generative AI tool to get a quick analysis, unaware that this data could be stored, processed, or reused beyond their control. Meanwhile, AI models themselves can sometimes retain and reproduce fragments of sensitive training data. These aren’t edge cases. They’re systemic risks born from normal use in the absence of proper safeguards.

Unlike traditional cyberattacks, these leaks often happen without anyone realizing it. A recent Gartner report highlights that, without strict protocols, AI chatbots can inadvertently expose private data stored in enterprise systems. That’s why securing AI starts with embedding clear policies, access controls, and monitoring into how these tools are adopted across the organization.

Unlike traditional data security, which focuses on safeguarding static datasets, AI data security must address unique challenges:

- High data volume requirements: AI models rely on large-scale data, increasing the surface area for potential leaks.

- Unverified data sources: Data used by AI typically comes from multiple sources, some of which are unverified, complicating security efforts.

- Adversarial threats: Malicious inputs can manipulate AI models, leading to incorrect outputs or compromised systems.

- Expanded attack surface: The complexity of AI systems, including models, APIs, and cloud infrastructure, creates more entry points for attackers.

Together, these factors demand a shift in how organizations approach data security when using AI. That shift requires robust strategies that ensure data integrity, confidentiality, and compliance with regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Real-world incidents, such as the accidental exposure of 38 TB of internal Microsoft data by the company's own AI researchers, highlight the stakes involved.

5 practices to mitigate data risks when integrating AI

Data security concerns aren’t a reason to avoid using AI. They’re a reason to use it wisely. As AI adoption becomes inevitable across business functions, the goal isn't to slow down innovation, but to integrate it responsibly. By taking proactive steps to secure sensitive information, businesses can unlock the full potential of AI tools without exposing themselves to unnecessary risk. Here are five foundational practices to help you implement AI securely, at scale.

- Establish a clear AI data governance framework

Define ownership, policies, and workflows around every AI project. Classify data by sensitivity and map who can access it, under what circumstances, and through which approved AI endpoints. Embedding AI governance into your existing data-governance program ensures that every bot, model, or API you deploy aligns with your broader data-security mandates.

- Leverage technical controls and monitoring

Encrypt all data in transit and at rest, enforce role-based access for AI tools, and use API gateways to centralize and log every AI interaction. Implement real-time scanning of prompts and outputs for sensitive keywords or PII (Personally Identifiable Information), and run periodic “red-team” exercises to test for leakage. By pairing encryption, access controls, and automated monitoring, you create a resilient defense against both accidental data spills and adversarial probing attacks.

- Vet and manage third-party AI vendors

Treat any external AI service as you would a critical cloud vendor. Require SOC 2 or ISO 27001 certification, insist on contractual guarantees that customer inputs won’t be used to retrain models, and prefer enterprise-grade or on-premises deployments that never share your data with public systems. Shadow-AI risks shrink considerably when you offer safe, approved alternatives and enforce single sign-on (SSO) or network restrictions.

- Embed privacy-enhancing technologies

Whenever possible, choose AI solutions that support differential privacy, data anonymization, or federated learning. These techniques limit how much of any individual record can be recovered from a model or its outputs, reducing the effectiveness of model-inversion or membership-inference attacks and supporting ongoing compliance with GDPR, CCPA, and similar regulations.

- Integrate AI into your risk and compliance programs

Expand your enterprise risk assessments and incident-response plans to cover AI-specific scenarios. Document every data flow into and out of your AI systems, conduct Data Protection Impact Assessments for high-risk use cases, and train your incident-response team to handle AI-related breaches alongside traditional cyber events.
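To make the real-time prompt scanning from the technical-controls practice more concrete, here is a minimal sketch in Python. The pattern set and the `scan_prompt` helper are hypothetical names chosen for illustration, and the regular expressions are deliberately simple. A production setup would more likely rely on a vetted PII-detection service with patterns tuned to its own data.

```python
import re

# Hypothetical, simplified patterns for illustration only; real PII
# detection needs broader coverage and validation than these regexes give.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD_NUMBER": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def scan_prompt(prompt: str) -> tuple[str, list[str]]:
    """Mask anything matching a PII pattern and report which labels fired."""
    findings = []
    redacted = prompt
    for label, pattern in PII_PATTERNS.items():
        # subn returns the rewritten text and the number of replacements.
        redacted, count = pattern.subn(f"[{label}_REDACTED]", redacted)
        if count:
            findings.append(label)
    return redacted, findings


if __name__ == "__main__":
    text = "Summarize the complaint from jane.doe@example.com, SSN 123-45-6789."
    safe_text, labels = scan_prompt(text)
    print(safe_text)
    print(labels)
```

A check like this would typically run before a prompt leaves the organization, with the returned labels fed into monitoring so that repeated findings can trigger user training or tighter policy.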

How an iPaaS solution helps integrate AI securely

As businesses adopt AI tools to improve workflows, from customer service automation to supply chain forecasting, they often run into a critical challenge: how to connect these tools to internal systems and data sources without compromising sensitive information. This is where iPaaS (integration Platform as a Service) solutions can significantly simplify AI integrations and strengthen data security.

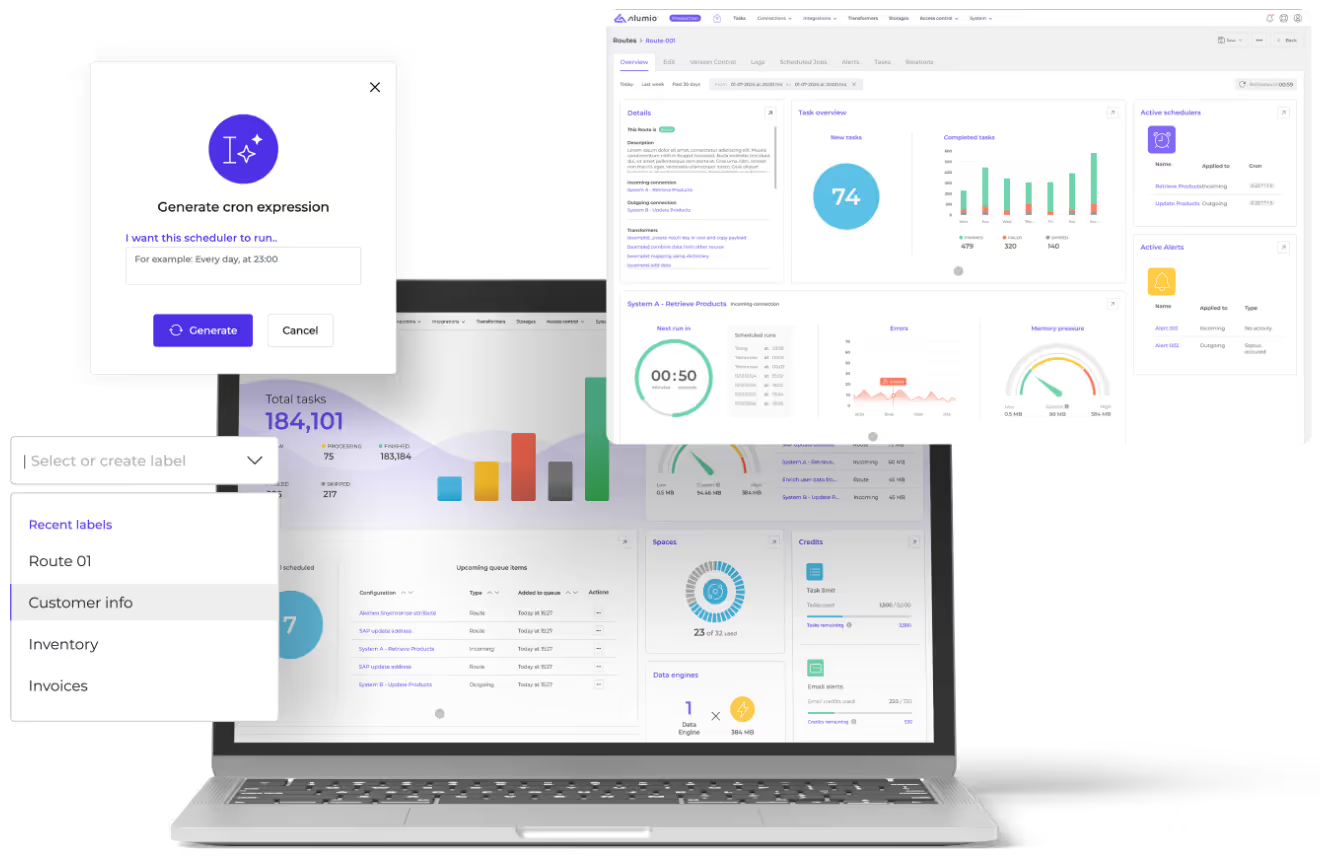

Alumio is an API-driven cloud-native iPaaS, or integration platform, designed to connect systems, transform data, and automate workflows across your IT landscape. It enables organizations to securely connect AI tools like OpenAI to ERP systems, e-commerce platforms, CRMs, and databases, without writing custom code or exposing raw data.

When integrating AI, an ISO 27001 certified iPaaS solution like Alumio comes with several built-in data security measures that help reduce the data risks of AI usage in the following ways:

- Control access to data by using the iPaaS as a secure layer between AI tools and your core systems. Only approved and relevant data is passed through, with fine-grained permissions and role-based filters applied before any exposure.

- Monitor and log every data transaction, ensuring traceability and auditability for every prompt, input, or response passing through an AI-connected workflow.

- Centralize API connections to gain full visibility into which data is shared, with whom, and when, reducing the risk of shadow tools or unauthorized access.

- Enforce enterprise-grade authentication (SSO, token management, encrypted keys) so that AI tools can only access data and systems as permitted.

- Automate compliance checks by embedding validation steps within data routes to ensure every integration meets regulatory and internal standards.
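The general idea behind the first two points, a mediation layer that filters fields by role and records what was shared, can be sketched in a few lines of Python. This is not Alumio's API or configuration. The `ALLOWED_FIELDS` policy, the role names, and the `prepare_ai_payload` function are all hypothetical, shown only to illustrate the pattern.

```python
import json
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("ai_gateway.audit")

# Hypothetical policy: which record fields each role may forward to an AI tool.
ALLOWED_FIELDS = {
    "support_agent": {"order_id", "product", "issue_summary"},
    "finance_analyst": {"order_id", "product", "order_total"},
}


def prepare_ai_payload(record: dict, role: str, user: str) -> dict:
    """Keep only the fields the role may share, and log what was forwarded."""
    allowed = ALLOWED_FIELDS.get(role, set())  # unknown roles share nothing
    payload = {key: value for key, value in record.items() if key in allowed}
    # Log field names only, so the audit trail never stores sensitive values.
    audit_log.info(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "forwarded_fields": sorted(payload),
        "withheld_fields": sorted(set(record) - allowed),
    }))
    return payload


if __name__ == "__main__":
    order = {
        "order_id": "A-1001",
        "product": "Router",
        "issue_summary": "No signal after firmware update",
        "customer_email": "jane@example.com",
        "order_total": 129.0,
    }
    print(prepare_ai_payload(order, "support_agent", "user-17"))
```

Defaulting unknown roles to an empty allowlist means a misconfigured or unapproved caller forwards nothing rather than everything, which matches the "only approved and relevant data is passed through" principle described above.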

In short, the Alumio iPaaS provides the infrastructure to connect AI tools securely and responsibly, so your teams can automate and innovate, without putting compliance or sensitive data at risk.

Embedding security into the DNA of AI integration

Making data security effective in the age of AI means embedding safeguards into every phase of adoption, from how AI tools are chosen, to how they’re integrated, to how they’re used daily across teams. It’s not just about defending against external threats like breaches or adversarial attacks. It’s about building responsible systems from the inside out. This starts with clear policies: define what data can be shared with AI tools, educate teams on secure prompting habits, and apply strict guidelines for how public or third-party models are accessed.

Then, layer on the right technical controls. Classify sensitive data, enforce encryption, restrict AI access based on roles, and implement tools to monitor prompts and flag exposed personal or proprietary data. Most importantly, select AI tools and integration solutions like the Alumio iPaaS that prioritize enterprise-grade security. By implementing strong governance, technical safeguards, and vetted AI workflows, businesses can unlock the full potential of AI, without compromising compliance, customer trust, or IP.