1. How did you start building AI integrations?

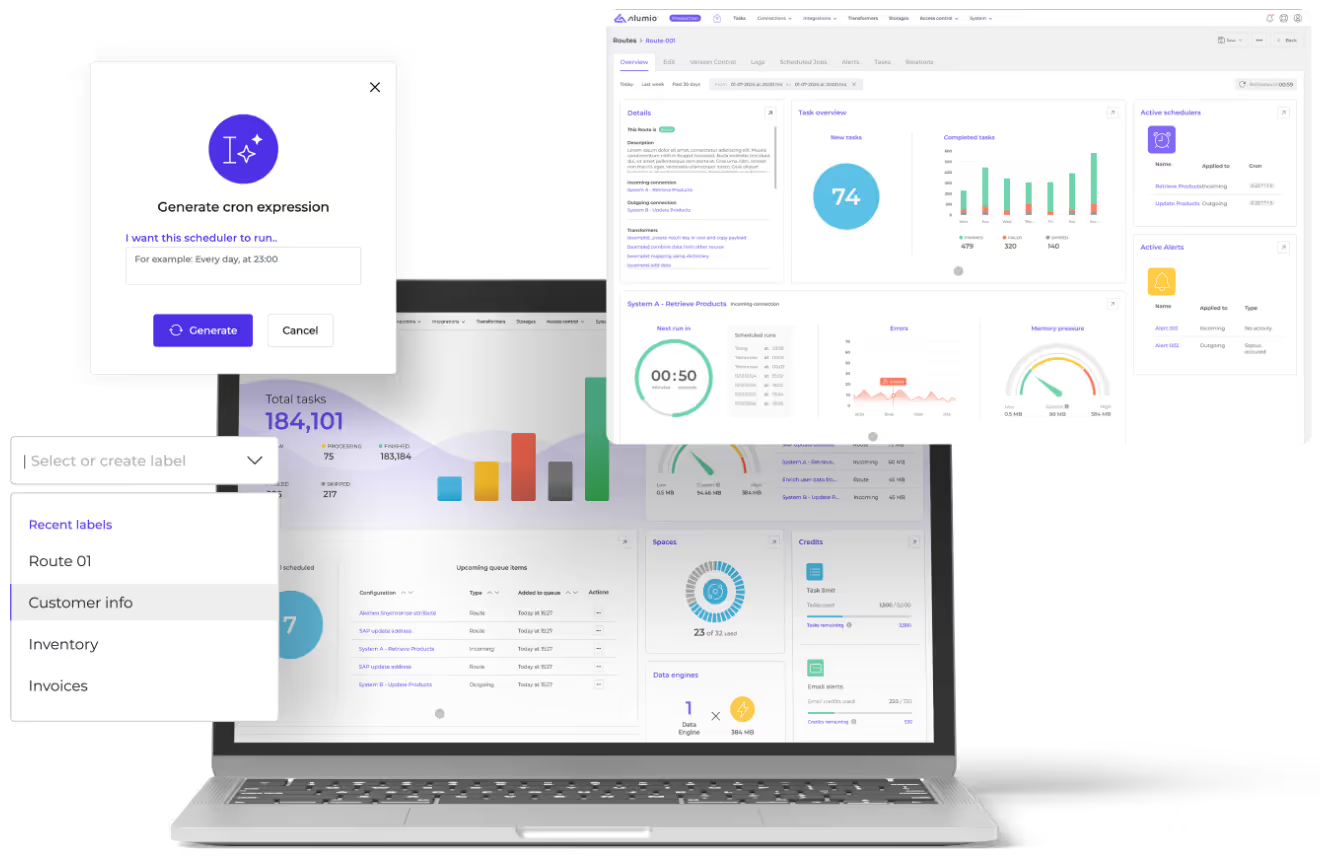

“Soon after ChatGPT launched, I started experimenting with integrating AI using the Alumio integration platform (iPaaS). The results with the early GPT models weren’t great, but I saw future potential, so I initially used AI to support my own process of creating workflows within Alumio.

My first AI integration project came about when I was advising a client against using a translation tool for their e-commerce website; the tool was too technical and would have been problematic in the long term. Instead, I proposed an AI-driven alternative using the Alumio iPaaS, which we were already using to help them integrate Akeneo, their PIM (Product Information Management) system.

After exploring several AI solutions, I settled on Google’s Vertex AI platform and chose its Gemini models for the integration project. Translating products from English or Dutch into other languages with Gemini was easy and fast, and using the Alumio iPaaS to integrate the AI with the client’s Akeneo PIM system was also relatively straightforward.

The integration flow we built within Alumio involves:

a) Retrieving product data (in English or Dutch) from the Akeneo PIM into Alumio.

b) Mapping the data within Alumio to be sent to the Gemini AI solution.

c) Sending the product attributes that need to be translated to the Gemini model via Alumio.

d) Receiving the translations from Gemini and saving them in the specific scope of that language.

In roughly 16 hours, we translated 12,000 products with this AI integration. We saved the client around €500–600 in monthly fees that the other translation application would have cost them. The return on investment was achieved in just three months.”
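The translation flow described above can be sketched as three pure mapping functions, leaving out the HTTP calls to Akeneo and Vertex AI. This is a minimal sketch, not Alumio's own configuration: it assumes Akeneo's REST product format (values keyed by attribute code, each entry carrying `locale`, `scope` and `data`) and the Gemini `generateContent` request/response JSON shape. It also assumes translations are written back as localized values (Akeneo's `locale` field), with `scope` left as `None` for attributes that are not channel-specific. The attribute list, prompt wording and function names are hypothetical.

```python
import json
from typing import Optional

# Hypothetical attribute codes to translate; the real list is client-specific.
TRANSLATABLE_ATTRIBUTES = ["name", "description"]


def extract_source_texts(product: dict, source_locale: str) -> dict:
    """Retrieve and map: collect the source-language values from an Akeneo-style product."""
    texts = {}
    for code in TRANSLATABLE_ATTRIBUTES:
        for value in product.get("values", {}).get(code, []):
            if value.get("locale") == source_locale and value.get("data"):
                texts[code] = value["data"]
    return texts


def build_translation_request(texts: dict, target_language: str) -> dict:
    """Send: wrap the texts in a Gemini generateContent request body."""
    prompt = (
        f"Translate the values of this JSON object into {target_language}. "
        "Keep the keys unchanged and reply with the JSON object only.\n"
        + json.dumps(texts, ensure_ascii=False)
    )
    return {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}


def to_akeneo_values(response: dict, target_locale: str, scope: Optional[str] = None) -> dict:
    """Receive and save: turn the model's reply into localized Akeneo product values."""
    reply_text = response["candidates"][0]["content"]["parts"][0]["text"]
    translations = json.loads(reply_text)  # assumes the reply is clean JSON
    return {
        code: [{"locale": target_locale, "scope": scope, "data": text}]
        for code, text in translations.items()
        if code in TRANSLATABLE_ATTRIBUTES  # ignore any keys the model invented
    }


# Usage with a hand-written reply in place of a real Vertex AI call:
product = {"values": {"name": [{"locale": "en_US", "scope": None, "data": "Garden chair"}]}}
request = build_translation_request(extract_source_texts(product, "en_US"), "German")
reply = {"candidates": [{"content": {"parts": [{"text": '{"name": "Gartenstuhl"}'}]}}]}
print(to_akeneo_values(reply, "de_DE"))
```

Keeping each step a separate function mirrors how the iPaaS splits retrieval, mapping and saving, so each step can be tested or swapped independently.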

2. How does an iPaaS help with AI integrations?

“When using an iPaaS like Alumio, adding AI to the integration flow is relatively simple and aligns well with how the iPaaS already works. Typically, the iPaaS pulls data from one system, transforms it into a structured format, and pushes it to another system. Introducing AI follows nearly the same process: you fetch the data, structure and map it in the format the AI expects, send it to the AI model (essentially a black box), receive a response, and then process or route that response accordingly.

There are two ways AI can be used effectively in combination with an iPaaS. The first, which is currently the more challenging of the two, uses AI to improve integration development itself within the iPaaS; the second integrates AI to enhance product capabilities.

- Improving integration development with AI: For the first use case, I feed the AI tool my strategies and templates for building integrations in Alumio. In response, the AI generates a basic implementation of these strategies. It won’t build the full mapping or apply business logic, but it gives me the foundational setup. For instance, it will produce the incoming configuration to fetch data from Akeneo, including logic to store timestamps so that each run retrieves only new or updated data. In other words, it quickly generates a skeleton for Routes, which speeds up the early stages of integration setup.

- Integrating AI with other tools or apps: On the product side, integrating AI via the Alumio iPaaS can help automate complex tasks such as translating product data (as in the previous example). AI is particularly effective at structuring unstructured data. For instance, one proof of concept we’re currently running automates the processing of purchase orders received as PDFs via email. The customer wants to import these purchase orders into their ERP automatically. While some of their suppliers send structured EDI data that integrates easily with the ERP, many simply email a PDF after a verbal order. Traditional OCR tools for reading PDFs require a separate template for each supplier, and those templates break whenever a layout changes. To solve this, we set up a dedicated inbox to which suppliers email their PDFs. We then built an integration in the Alumio iPaaS that picks up the PDF orders from this inbox and sends them to Gemini, enriching each request with contextual data from the ERP (such as the full supplier catalog). Gemini cross-references this ERP data and extracts specific data from the PDF, such as supplier names or order details, allowing us to create structured purchase orders automatically.

Overall, while Alumio already speeds up how we build integrations and automate processes for our customers, adding AI definitely helps boost efficiency on both fronts.”
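The timestamp logic mentioned for the first use case (fetch only what changed since the last run) is a standard watermark pattern. Here is a minimal sketch, assuming Akeneo's REST product search filter on `updated` with the `>` operator and `Y-m-d H:i:s` dates. `WatermarkStore`, `run_incremental` and the `fetch` callable are hypothetical stand-ins, not Alumio's configuration or storage API, and pagination and timezones are left out.

```python
import json
from datetime import datetime
from typing import Callable, Optional
from urllib.parse import urlencode

# Date format used in the assumed Akeneo `updated` search filter.
AKENEO_DATE_FORMAT = "%Y-%m-%d %H:%M:%S"


class WatermarkStore:
    """Hypothetical in-memory store; a real setup would persist it between runs."""

    def __init__(self) -> None:
        self._last_run = {}

    def get(self, route: str) -> Optional[str]:
        return self._last_run.get(route)

    def set(self, route: str, timestamp: str) -> None:
        self._last_run[route] = timestamp


def build_products_query(last_run: Optional[str], page_size: int = 100) -> str:
    """Build a product list URL that only asks for items updated after `last_run`."""
    params = {"limit": str(page_size)}
    if last_run is not None:
        params["search"] = json.dumps({"updated": [{"operator": ">", "value": last_run}]})
    return "/api/rest/v1/products?" + urlencode(params)


def run_incremental(store: WatermarkStore, route: str, started_at: datetime,
                    fetch: Callable[[str], list]) -> list:
    """Fetch changed products, then advance the watermark only if the fetch succeeded."""
    query = build_products_query(store.get(route))
    products = fetch(query)  # if this raises, the stored timestamp stays unchanged
    # Store the run's start time so items edited during the run are caught next time.
    store.set(route, started_at.strftime(AKENEO_DATE_FORMAT))
    return products
```

The first run (no stored timestamp) fetches everything; later runs add the `updated` filter. Because the timestamp has one-second precision, a real setup may want a small overlap plus de-duplication rather than relying on `>` alone.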

3. When building AI integrations, what's the margin of error?

“Most generative AI errors come down to how much contextual information the AI receives. For instance, in the previous example, where we used Alumio to build an integration that sends supplier PDFs to Gemini for data extraction, we also modified the data exchange to include contextual data from the ERP (the full supplier catalog). This allows the AI to make smart associations when turning the supplier PDFs into purchase orders. For example, if a PDF lists ‘BR Green’ as the supplier, the AI can infer that it likely refers to ‘Brothers Green’ in the ERP. Adding more contextual information therefore reduces the chance of error.

To reduce the margin of error further, we typically combine our AI integrations with a user interface that displays the AI-generated content and lets users review it before it goes live. We also configure Alumio to run checks in the background, for example flagging a discrepancy if the price on an order doesn’t match what the ERP expects. The level of oversight depends on the use case: some businesses are comfortable pushing AI-translated product data straight to their webshop without review, while others insist on manual approval before publishing. But for critical processes like purchase orders, where errors can have a financial impact, validating what the AI generates is essential.”
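Both safeguards described in this answer, supplying ERP context up front and checking the AI's output against the ERP afterwards, can be sketched together. This is a minimal sketch, assuming the Gemini `generateContent` REST body with the PDF passed as an `inline_data` part. The prompt wording, the requested JSON shape, the price tolerance, the routing labels and the function names are all hypothetical; in the setup described, these checks are configured in Alumio, and the Python below only illustrates the logic.

```python
import base64
import json


def build_extraction_request(pdf_bytes: bytes, suppliers: list, catalog: dict) -> dict:
    """Attach the PDF and add ERP context so the model can map e.g. 'BR Green' to a known supplier."""
    context = json.dumps({"known_suppliers": suppliers, "catalog_prices": catalog})
    instructions = (
        "Extract the purchase order from the attached PDF. Map the supplier to one of "
        "known_suppliers and every order line to a SKU in catalog_prices. Reply with JSON only, "
        'shaped like {"supplier": "...", "lines": [{"sku": "...", "quantity": 1, "unit_price": 0.0}]}.\n'
        "ERP context: " + context
    )
    pdf_part = {"inline_data": {"mime_type": "application/pdf",
                                "data": base64.b64encode(pdf_bytes).decode("ascii")}}
    return {"contents": [{"role": "user", "parts": [pdf_part, {"text": instructions}]}]}


def validate_order(order: dict, suppliers: list, catalog: dict, tolerance: float = 0.01) -> list:
    """Background checks on the AI output; returns a list of human-readable issues."""
    issues = []
    if order.get("supplier") not in suppliers:
        issues.append(f"unknown supplier: {order.get('supplier')!r}")
    lines = order.get("lines") or []
    if not lines:
        issues.append("order has no lines")
    for line in lines:
        sku, qty, price = line.get("sku"), line.get("quantity"), line.get("unit_price")
        expected = catalog.get(sku)
        if expected is None:
            issues.append(f"unknown SKU: {sku!r}")
        elif not isinstance(price, (int, float)) or abs(price - expected) > tolerance:
            issues.append(f"price mismatch on {sku}: order {price!r}, ERP {expected}")
        if not isinstance(qty, int) or qty <= 0:
            issues.append(f"invalid quantity on {sku}: {qty!r}")
    return issues


def route_order(order: dict, suppliers: list, catalog: dict) -> str:
    """Send clean orders straight to the ERP and anything flagged to a review screen."""
    return "manual_review" if validate_order(order, suppliers, catalog) else "import_to_erp"
```

Routing on the list of issues, rather than on a single pass/fail flag, gives the review interface something concrete to show the user.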

4. What are the biggest challenges when it comes to integrating AI?

“The biggest challenge right now is that most of the AI models we use are still fundamentally built on large language models (LLMs). Even on the API side, they’re primarily designed for chatbot-like interactions, which isn’t how we typically use them in integrations. Features such as function calling and structured outputs do exist (Google’s Gemini supports both, for example), yet we often still receive plain-text responses that contain a JSON object rather than properly structured JSON. This shows how rooted these models still are in conversational design, even when they’re used for backend processes.

This has caused us a fair share of issues, especially when deserializing responses. The models understand code quite well, but they still generate it as raw text, so they can forget a comma, miss a curly bracket, or make other small syntax errors that break a process. Handling these inconsistencies takes some work early on, but the models are improving rapidly and are already much more reliable than they were a year ago.”
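The two mitigations implied here, requesting structured output and parsing defensively when JSON still arrives wrapped in text, can be sketched as follows. This assumes Gemini's `generationConfig` fields `responseMimeType` and `responseSchema`. The schema is a hypothetical purchase-order shape, and `parse_model_json` and `get_json_with_retry` are hypothetical helpers. The parser deliberately does not try to repair a missing comma or bracket: it raises, so the caller can retry or send the item to review.

```python
import json
import re
from typing import Callable

# Structured-output settings for a Gemini generateContent request (generationConfig).
# The schema itself is a hypothetical purchase-order shape.
ORDER_SCHEMA = {
    "type": "OBJECT",
    "properties": {
        "supplier": {"type": "STRING"},
        "lines": {"type": "ARRAY", "items": {"type": "OBJECT", "properties": {
            "sku": {"type": "STRING"},
            "quantity": {"type": "INTEGER"},
            "unit_price": {"type": "NUMBER"},
        }}},
    },
    "required": ["supplier", "lines"],
}
GENERATION_CONFIG = {"responseMimeType": "application/json", "responseSchema": ORDER_SCHEMA}

FENCE = "`" * 3  # markdown code fence that chat-style replies often wrap JSON in
FENCED_JSON = re.compile(re.escape(FENCE) + r"(?:json)?\s*(.*?)" + re.escape(FENCE), re.DOTALL)


def parse_model_json(text: str) -> dict:
    """Return a JSON object extracted from a reply that may include prose or a fence."""
    candidates = [text.strip()]
    fenced = FENCED_JSON.search(text)
    if fenced:
        candidates.append(fenced.group(1).strip())
    start, end = text.find("{"), text.rfind("}")
    if start != -1 and end > start:
        candidates.append(text[start:end + 1])  # outermost braces, ignoring surrounding prose
    for candidate in candidates:
        try:
            value = json.loads(candidate)
        except json.JSONDecodeError:
            continue  # e.g. a missing comma or bracket; try the next candidate
        if isinstance(value, dict):
            return value
    raise ValueError("model reply contains no valid JSON object")


def get_json_with_retry(call_model: Callable[[], str], max_attempts: int = 3) -> dict:
    """Ask the model again when a reply cannot be parsed, up to max_attempts times."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return parse_model_json(call_model())
        except ValueError as err:
            last_error = err
    raise ValueError(f"no valid JSON after {max_attempts} attempts") from last_error
```

Requesting a schema should reduce how often the fallback parsing is needed, but keeping the parser and retry in place covers replies that still arrive as text.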